This week I researched AI models to implement for the different imaging modalities, specifically mammography. I also finally had the opportunity to talk to my mentor on Thursday (June 1st, 2023).

You can find the recorded meeting link here.

Building a Breast Cancer Classification Model from Scratch

At the start of the week, I attempted to implement a VGG16 classifier to distinguish between malignant and benign calcifications in a mammogram. Using PyTorch, I developed the model and achieved a validation accuracy of approximately 70%. The source code can be accessed here. This model and code serve as a starting point for implementing the more complex model described in this paper. Briefly, the paper discusses first building a model solely on the region of interest (ROI) of the calcification/tumor, then adding layers and training on the entire images. According to the paper, this method significantly reduces the training loss and, consequently, improves overall accuracy.

Mentor Discussion and Change in Direction

I was able to have a discussion with my mentor, Dr. Saptarshi, this week. He advised me to start by implementing a hook in the OHIF extension that calls the respective AI model based on the image modality. After that, we will continue with the AI model implementation activities.

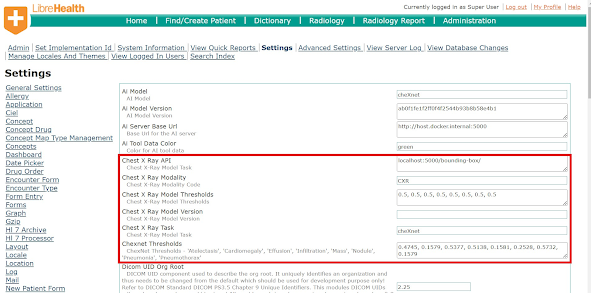

First, we decided to extend the administration settings with the different imaging modalities (e.g., Mammo, Head CT) and the tasks (such as segmentation or classification) available under each of them. When a user opens an image through the OHIF viewer, the application reads the modality from the DICOM metadata and calls the corresponding task. For instance, the Head CT modality could have tasks/models like tumor detection, segmentation, etc. If the task 'tumor detection' is selected for the Head CT modality in the settings and a user opens a Head CT image in the OHIF viewer, the application will read the modality from the DICOM metadata and call the respective task/model from the AI model service.
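The lookup described above can be sketched in a few lines of Python. This is only a minimal sketch under assumptions, not the project's implementation: the settings layout, the helpers `modality_label` and `resolve_model`, and all model names are hypothetical. It also assumes that a label such as "Head CT" is derived from the standard DICOM attributes Modality (0008,0060) and BodyPartExamined (0018,0015), since Modality on its own only carries codes such as `CT` or `MG`.

```python
from typing import Optional

# Hypothetical administration settings: for each modality label, the task
# selected by the administrator and the model registered for each task.
SETTINGS = {
    "Mammo": {
        "selected_task": "classification",
        "models": {"classification": "mammo-calc-classifier"},
    },
    "Head CT": {
        "selected_task": "tumor detection",
        "models": {
            "tumor detection": "headct-tumor-detector",
            "segmentation": "headct-segmenter",
        },
    },
}


def modality_label(metadata: dict) -> Optional[str]:
    """Map DICOM metadata to a settings label (assumed mapping)."""
    modality = metadata.get("Modality")
    body_part = (metadata.get("BodyPartExamined") or "").upper()
    if modality == "MG":
        return "Mammo"
    if modality == "CT" and body_part == "HEAD":
        return "Head CT"
    return None  # no settings entry for this kind of image


def resolve_model(metadata: dict, settings: dict = SETTINGS) -> Optional[str]:
    """Return the model configured for the image's modality, if any."""
    entry = settings.get(modality_label(metadata))
    if entry is None:
        return None
    return entry["models"].get(entry["selected_task"])


if __name__ == "__main__":
    print(resolve_model({"Modality": "CT", "BodyPartExamined": "HEAD"}))
```

Returning `None` for an unknown modality lets the viewer open the image without calling any model, which seems a safer default than raising an error.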

To implement this, I will first have to create a callback API that collects data on the available modalities, models and their tasks from the AI model service. The information gathered will be filled in automatically in the administration settings. Based on my mentor's advice, I will start by creating a list of the issues needed to implement the callback function, get them reviewed, and add them to the repository's issue list. Each issue will need to describe one logical unit of work. Implementation will follow after that.
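To make the data flow concrete, here is a rough sketch of how the information returned by the AI model service could be grouped to pre-fill the administration settings. Everything in it is an assumption for illustration: the JSON payload shape, the field names `name`, `modality` and `task`, and the function `build_settings_catalog` are hypothetical, and the real service may expose this information differently.

```python
import json
from collections import defaultdict


def build_settings_catalog(payload: str) -> dict:
    """Group model descriptors by modality, then by task.

    `payload` is assumed to be a JSON array of objects with the
    hypothetical fields "name", "modality" and "task".
    """
    catalog = defaultdict(lambda: defaultdict(list))
    for model in json.loads(payload):
        catalog[model["modality"]][model["task"]].append(model["name"])
    # Convert the nested defaultdicts into plain dicts with sorted names.
    return {
        modality: {task: sorted(names) for task, names in tasks.items()}
        for modality, tasks in catalog.items()
    }


# Hypothetical response from the AI model service.
EXAMPLE_PAYLOAD = json.dumps([
    {"name": "headct-tumor-detector", "modality": "Head CT", "task": "tumor detection"},
    {"name": "headct-segmenter", "modality": "Head CT", "task": "segmentation"},
    {"name": "mammo-calc-classifier", "modality": "Mammo", "task": "classification"},
])

if __name__ == "__main__":
    print(json.dumps(build_settings_catalog(EXAMPLE_PAYLOAD), indent=2))
```

Grouping by modality first mirrors the settings page described above, where tasks are listed under each modality.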

To implement the hook, I will have to work with both JS and Java code. I'm fairly new to Java, although I do have some experience with JS. I discussed this with my mentor, assuring him that I would learn and put in the necessary effort to complete this phase, even though I may require his assistance a bit more frequently. We agreed that it's preferable to tackle the more challenging aspects first.

At present, I am in the process of creating the issues and delving into the code to understand it. See you next week.
